本文最后更新于 2026年1月9日 晚上

欧拉-丸山(Euler-Maruyama)法

wiki百科上的词条

Euler法(ODE):

dtdx=a(x(t))

有迭代格式

x(t+Δt)=x(t)+a(x(t))Δt

丸山法(SDE):

dx=f(x,t)dt+g(t)dW

有迭代格式

x(t+Δt)=x(t)+f(x,t)Δt+g(t)ΔW

文中将上述迭代格式写为

xi+1=xi+f(x,t)Δt+g(t)ΔtZ

简化为

xi+1=xi+f(x,t)+g(t)Z

DDPM与SMLD在SDE视角下的大一统

Forward process:

dx=f(x,t)dt+g(t)dW

Backward process:

dx=[f(x,t)−g2(t)sθ(t)]dt+g(t)dw

DDPM

DDPM回忆与转化

回忆加噪过程

xi=1−βixi−1+βi ϵ,ϵ∼N(0,1),i=1,…,N

定义如下变量:

x(t=Ni)=xi,β(t=Ni)=Nβi,Δt=N1,t∈[0,1]

于是,

x(t+Δt)(Taylor 展开)(取极限)=1−Nβ(t+Δt)x(t)+Nβ(t+Δt) ϵ=1−β(t+Δt)Δtx(t)+β(t+Δt)Δt ϵ≈(1−21β(t+Δt)Δt)x(t)+β(t+Δt)Δt ϵ=(1−21β(t)Δt)x(t)+β(t)Δt ϵ

移项得

x(t+Δt)−x(t)=−21β(t)Δtx(t)+β(t)Δt ϵ

得到 DDPM 对应的SDE,

dx=−21β(t)x(t)dt+β(t) dW

与Ito过程SDE的对应项:

- f(x,t)=−21β(t)x(t)

- g(t)=β(t)

逆过程的表达式为:

dx=[−21β(t)x(t)−β(t)sθ(t)]dt+β(t)dw

回忆DDPM中的

xt=αtx0+1−αt ϵ ⇔ ϵ=1−αtxt−αtx0

因此,可以得到如下关系:

∇xtlogp(xt∣x0)=−∇xt2σt2(xt−μt)2=−σt2xt−μt=−1−αtxt−αtx0=−1−αtϵθ=sθ

DDPM采样过程的迭代格式转化为score function的形式:

xt−1=αt1(xt−1−αˉt1−αtϵθ)+σtZ=1−β(t)1(x(t)−1−αtϵθβ(t))+β(t)Z=1−β(t)1(x(t)+sθ(t)β(t))+β(t)Z

对DDPM的逆向过程使用Euler-Maruyama法,将具体函数代入迭代格式中,

xi=xi+1−f(x,t)+g2(t)sθ(t)+g(t)Z=xi+1+21βi+1xi+1+βi+1sθ(t)+βi+1Z≈xi+1+21βi+1xi+1+βi+1sθ(t)+21βi+12sθ+βi+1Z≈(1+21βi+1+o(βi+1))(xi+1+βi+1sθ)+βi+1Z≈1−βi+11(xi+1+βi+1sθ)+βi+1Z

此即DDPM的采样过程。第三步是因为βi+1→0,第四步是为了凑Taylor展开。

SMLD

考虑原论文的采样过程,

⇒⇒⇒⇒xi+1=xi+σi+12−σi2 ϵixi+1=xi−1+σi+12−σi2 ϵi+σi2−σi−12 ϵi−1xi+1=xi−1+σi+12−σi−12 ϵi′⋯xi+1=x0+σi+12−σ02 ϵ

于是,有迭代格式

x(t+Δt)=x(t)+σ(t+Δt)2−σ(t)2 Z=x(t)+Δtσ(t+Δt)2−σ(t)2 Δt Z

令 Δt→0,得到 SMLD 对应的SDE,

dx=dtdσ2(t) dW

与Ito process前向过程的SDE对应项:

- f(x,t)=0

- g(t)=dtdσ2(t)

相应的反向ODE为,

dx=−dtdσ2(t)sθ(t)dt+dtdσ2(t)dw

仿照前文,对SMLD的采样过程使用Euler-Maruyama法,将具体函数代入迭代格式中,

xi=xi+1−f(x,t)+g2(t) sθ(t)+g(t)Z=xi+1+(σi+12−σi2) sθ(t)+σi+12−σi2 Z

此即SMLD的采样过程。

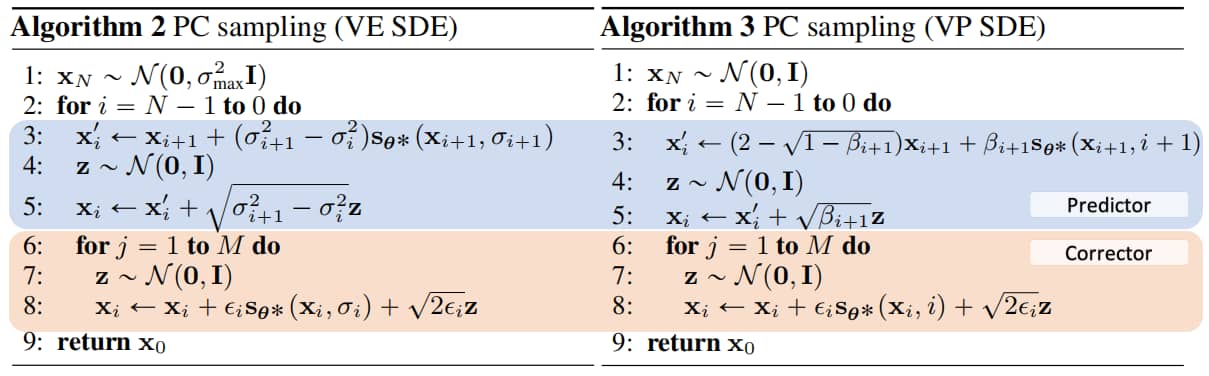

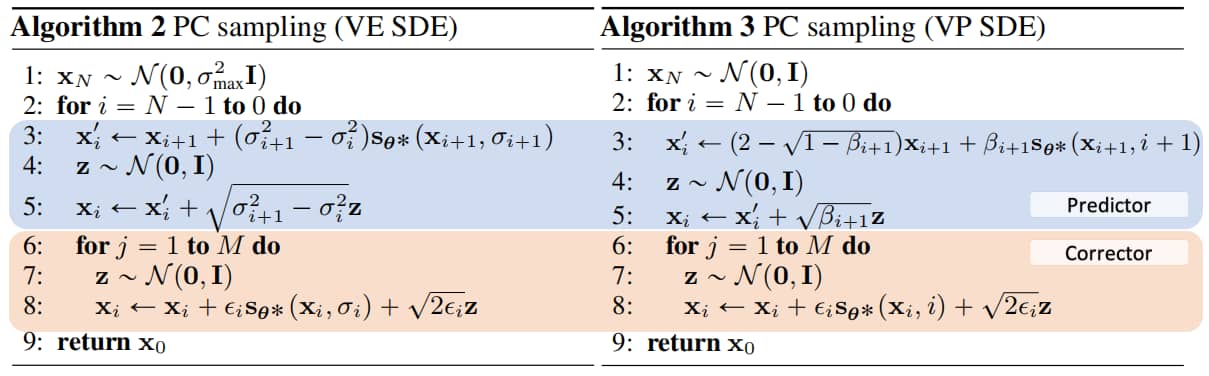

预测-校正采样法

预测-校正(Predictor-Corrector sampling, PC)

预测器(predictor)可采用任何固定离散化策略的反向时间随机微分方程数值求解器,校正器(corrector)可采用任何基于分数的马尔可夫链蒙特卡洛方法(score-based MCMC approach)。

算法

算法

概率流ODE

Probability Flow ODE

边缘分布的等价性证明

概率流常微分方程(ODE)的思想受到Maoutsa等人(2020)的启发,其中可找到一个简化情况的推导。下面我们将推导式(17)中完全一般化的ODE。

考虑式(15)中的随机微分方程(SDE),其形式如下:

dx=f(x,t)dt+G(x,t)dw

其中

- f(⋅,t):Rd→Rd

- G(⋅,t):Rd→Rd×d

边缘概率密度 pt(x(t)) 根据 Kolmogorov 前向方程(Fokker-Planck方程)<sup><a href="#ref1">[1]</a></sup> 演化:

∂t∂pt(x)=−i=1∑d∂xi∂[fi(x,t)pt(x)]+21i=1∑dj=1∑d∂xi∂xj∂2[k=1∑dGik(x,t)Gjk(x,t)pt(x)]

我们可将上式重写为,

∂t∂pt(x)=−i=1∑d∂xi∂[fi(x,t)pt(x)]+21i=1∑dj=1∑d∂xi∂xj∂2[k=1∑dGik(x,t)Gjk(x,t)pt(x)]=−i=1∑d∂xi∂[fi(x,t)pt(x)]+21i=1∑d∂xi∂[j=1∑d∂xj∂[k=1∑dGik(x,t)Gjk(x,t)pt(x)]](1)

注意到,

==j=1∑d∂xj∂[k=1∑dGik(x,t)Gjk(x,t)pt(x)]j=1∑d∂xj∂[k=1∑dGik(x,t)Gjk(x,t)]pt(x)+j=1∑dk=1∑dGik(x,t)Gjk(x,t)pt(x)∂xj∂logpt(x)pt(x) ∇⋅[G(x,t)G(x,t)⊤]+pt(x)G(x,t)G(x,t)⊤∇xlogpt(x)

基于此,我们可继续重写式,

∂t∂pt(x)=−i=1∑d∂xi∂[fi(x,t)pt(x)]+21i=1∑d∂xi∂[j=1∑d∂xj∂[k=1∑dGik(x,t)Gjk(x,t)pt(x)]]=−i=1∑d∂xi∂[fi(x,t)pt(x)]+21i=1∑d∂xi∂[pt(x)∇⋅[G(x,t)G(x,t)⊤]+pt(x)G(x,t)G(x,t)⊤∇xlogpt(x)]=−i=1∑d∂xi∂{fi(x,t)pt(x)−21[∇⋅[G(x,t)G(x,t)⊤]+G(x,t)G(x,t)⊤∇xlogpt(x)]pt(x)}=−i=1∑d∂xi∂[f~i(x,t)pt(x)]

其中我们定义:

f~(x,t):=f(x,t)−21∇⋅[G(x,t)G(x,t)⊤]−21G(x,t)G(x,t)⊤∇xlogpt(x)(2)

观察式 (2) 可知,它等价于以下 SDE 的 Kolmogorov 前向方程(此时该方程也称为 Liouville 方程),其中 G~(x,t):=0:

dx=f~(x,t)dt+G~(x,t)dw

这本质上是一个常微分方程:

dx=f~(x,t)dt

与式(17)给出的概率流ODE一致。因此,我们证明了概率流ODE(式17)与式(15)中的SDE具有相同的边缘概率密度 pt(x)。

这是一段需要引用文献的内容,

另一段引用<sup><a href="#ref2">2</a></sup>。

参考文献

-

[1] Stochastic differential equations

Bernt Øksendal. In Stochastic differential equations, pp. 65–84. Springer, 2003.

-

[2] 霍金 S. 时间简史[M]. bantam, 2005.